Robots were supposed to be cooler by now. At least that’s what we were led to believe growing up. Remember the promise of humanoid helpers with blinking eyes, mechanical voices, and screens filled with mystery and charm? Somehow, we ended up with soulless chatbots spitting out generic images of Mickey Mouse and calling it innovation.

But someone out there still remembers what robots are supposed to feel like. And they’ve actually gone and built one.

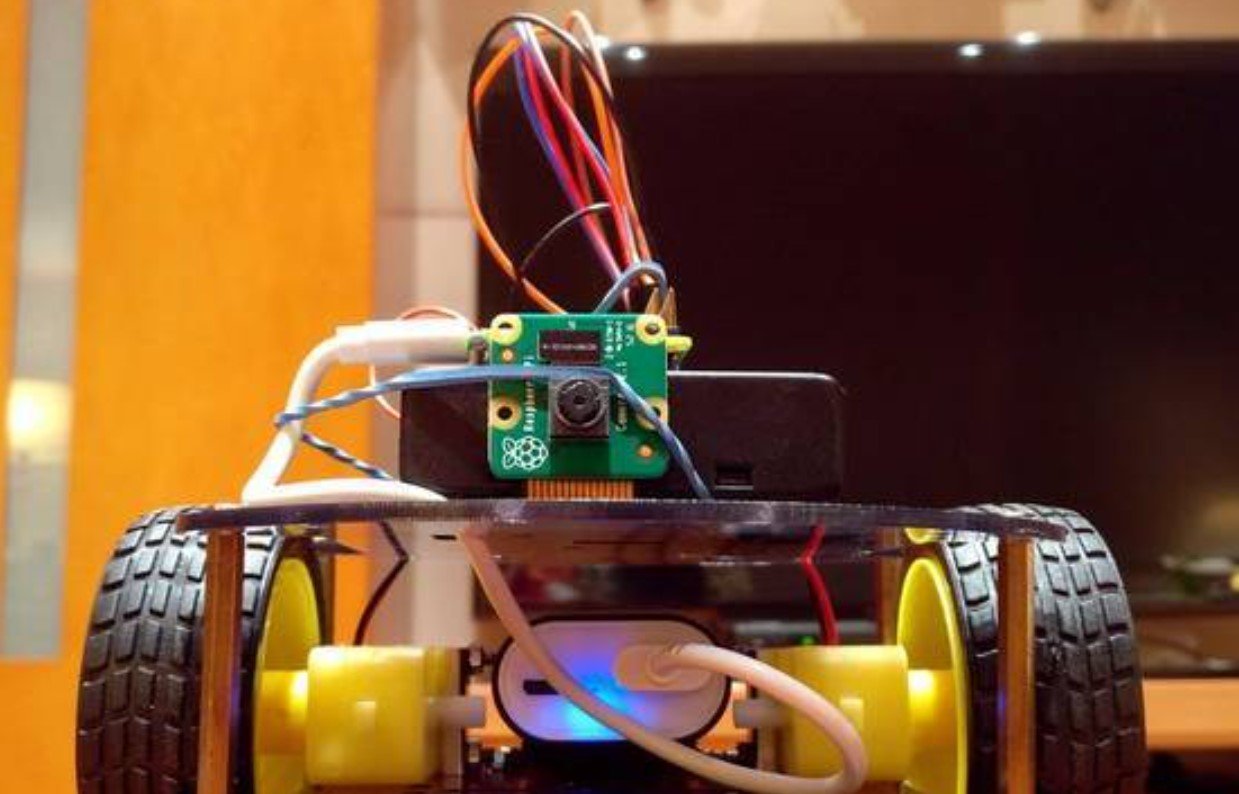

Meet TOMMY-B-003, a Raspberry Pi-powered animatronic that looks like it crawled out of a late-night rerun of Buck Rogers or wandered off the set of Short Circuit alongside Johnny 5. Created by a Reddit user who goes by “Exercising Ingenuity”, TOMMY-B-003 isn’t just a fun gimmick. It’s a small rebellion against the sanitized, overly polished face of modern tech.

A Robot with Eyes, a Soul (Sort Of), and a CRT Screen

The robot first appeared in a short video post on the Raspberry Pi subreddit and immediately caught the attention of retro-tech fans, hobbyists, and curious onlookers. In the clip, TOMMY-B-003’s blinking artificial eyes follow the viewer, a retro oscilloscope trace flutters with every word, and a CRT screen does… well, whatever old-school robot screens used to do. You know, just being there and looking important.

It’s more than aesthetics, though. There’s some legit tech going on under the hood. A Raspberry Pi 4B runs the show, and OpenAI’s API handles real-time, speech-to-speech conversation. It’s not just a puppet. It talks back.

Just don’t expect Siri or Alexa levels of polish. And that’s sort of the point.

Not Just Another DIY Project: It’s Personal

In a follow-up, Exercising Ingenuity explained their inspiration:

“Over the past year I built an interactive robot that tries to fulfill my childhood ideal of what a robot should be.”

That line hits hard for anyone raised on a steady diet of sci-fi and optimism. This isn’t just a Raspberry Pi project—it’s a love letter to a version of the future that never quite arrived.

The design builds on Thomas Burns’ Alexatron, but the soul of TOMMY-B-003 is all its own. The blinking eyes, the moving screen, the way it just… stares. Slightly unsettling? Maybe. But also kind of wonderful.

What Powers TOMMY-B-003?

So, what makes this bot tick? Here’s a breakdown of its core setup:

- Raspberry Pi 4B: It’s the brain and heart of the whole system.
- OpenAI’s real-time API: Used for speech interaction, making TOMMY feel less like a machine and more like a quirky companion.
- Facial recognition: Yep, those eyes aren’t just blinking. They’re watching.
- Animatronics: Motors and servos bring the head and expressions to life.
- CRT + Oscilloscope: For pure retro flair and functional expression.

It’s not just functional; it’s expressive. The oscilloscope turns TOMMY’s voice into something you can see, and the CRT gives him a face. It’s just the right mix of tech and nostalgia.
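For the curious, here’s roughly what “eyes that follow you” can boil down to once a face has been found. This is a hypothetical Python sketch, not TOMMY-B-003’s actual code: it assumes some face detector has already reported the horizontal center of a face in the camera frame, and that each eye servo accepts an angle between an assumed 45° and 135°. The function name and angle limits are invented for illustration.

```python
# Hypothetical sketch: map a detected face's horizontal position in a camera
# frame to an eye-servo angle. Not TOMMY-B-003's actual code; the angle
# limits below are assumed values.

def face_to_servo_angle(face_center_x: float, frame_width: int,
                        min_angle: float = 45.0, max_angle: float = 135.0) -> float:
    """Linearly map a pixel x-coordinate (0..frame_width) to a servo angle.

    The result is clamped so a face at or beyond the frame edge never drives
    the servo past its assumed mechanical limits.
    """
    if frame_width <= 0:
        raise ValueError("frame_width must be positive")
    # Normalize to 0.0 (left edge) .. 1.0 (right edge), clamping out-of-range input.
    t = min(max(face_center_x / frame_width, 0.0), 1.0)
    return min_angle + t * (max_angle - min_angle)


if __name__ == "__main__":
    # A face centered in a 640-pixel-wide frame points the eyes straight ahead.
    print(face_to_servo_angle(320, 640))  # 90.0
```

Clamping matters here: a face drifting half out of frame shouldn’t drive a servo past its mechanical stops.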

Could a Raspberry Pi Handle a Local LLM?

Here’s where it gets tricky. Exercising Ingenuity has plans to take things even further—by squeezing a large language model (LLM) onto the Pi itself. That’s bold.

Why? Because local LLMs are memory-hungry, CPU-thirsty beasts, and the Raspberry Pi, for all its usefulness, isn’t exactly a powerhouse: the Pi 4B tops out at 8 GB of RAM and a quad-core ARM Cortex-A72 processor. There’s a reason many Pi-based AI tools rely on cloud processing. Local inference is hard. Very hard.
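To put “memory-hungry” in numbers, a common rule of thumb is that a model’s weights alone take roughly (parameter count × bits per weight ÷ 8) bytes. The Python sketch below applies that rule to a few illustrative model sizes; the sizes and precisions are assumptions chosen for the example, and the estimate ignores the KV cache, activations, the operating system, and everything else TOMMY is already running, so real usage would be higher.

```python
# Back-of-envelope estimate of the memory needed just to hold an LLM's weights.
# Ignores the KV cache, activations, and runtime overhead, so real usage is higher.

def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PI_4B_MAX_RAM_GB = 8  # largest Raspberry Pi 4B variant

if __name__ == "__main__":
    for params in (1e9, 7e9, 13e9):   # illustrative model sizes (assumed)
        for bits in (16, 4):          # half precision vs. 4-bit quantization
            gb = weight_memory_gb(params, bits)
            verdict = "under" if gb < PI_4B_MAX_RAM_GB else "over"
            print(f"{params / 1e9:.0f}B params @ {bits}-bit: {gb:.1f} GB "
                  f"({verdict} the Pi's {PI_4B_MAX_RAM_GB} GB)")
```

Even on an 8 GB Pi 4B, only small or heavily quantized models get in the door, and memory is only half the problem: the CPU still has to crunch through those weights for every token it generates.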

Still, it’s been attempted before—with mixed, often terrifying results. There was even an “art piece” meant to explore just how bad the output gets when you force an LLM to run on such modest hardware.

If TOMMY-B-003 does end up with a locally run LLM, don’t expect poetic dialogue. Expect weird pauses, glitchy responses, and that awkward feeling that your robot friend is thinking just a little too hard.

And weirdly… that might make it even better.

Robot Companions Aren’t Dead—They’re Just Hidden in Garages

It’s tempting to dismiss TOMMY-B-003 as just a fun little side project. But it taps into something deeper—something we’ve lost. Robots used to spark the imagination. They weren’t productivity tools. They weren’t SEO machines. They were characters. Personalities. Companions.

Now? Most “robots” are voice assistants that pretend to be helpful but can’t handle anything outside preset tasks. And let’s not even talk about the deluge of AI-generated images flooding social feeds. Nobody asked for that.

Sometimes, it feels like modern robots forgot how to be fun.

But not TOMMY-B-003.

One Reddit user summed it up best: “I’d actually use Copilot if it looked like this.” That’s only half a joke. A strange-looking robot with blinking eyes and a CRT screen feels more trustworthy than a floating digital assistant logo.

Nostalgia Meets Ingenuity in a Curious New Way

TOMMY-B-003 doesn’t just revive old-school aesthetics. It makes the case that our childhood dreams of robots weren’t wrong. They were just… waiting for the right hobbyist to bring them back.

Here’s how TOMMY stacks up against today’s usual AI suspects:

| Feature | TOMMY-B-003 | Modern Voice Assistant |
|---|---|---|
| Eyes that track you | ✅ Yes | ❌ No |
| CRT/Physical Display | ✅ Yes | ❌ No screen |
| Personality | ✅ Quirky and retro | 🤖 Corporate and bland |
| Offline capability | 🚧 Planned (local LLM) | ❌ Not without internet |
| Emotional appeal | ✅ High | 💤 Low |

Sure, TOMMY doesn’t fetch your groceries or run your calendar. But he stares at you like he knows something you don’t. He blinks. He thinks (or tries to). And that might be more valuable than a thousand perfect Siri replies.